Weapon Balancing in Modern Games

Weapon balancing is one of the most critical tasks in game design. Whether a title is a fast-paced competitive shooter or a sprawling role-playing game, the way weapons feel and interact shapes player experience and long-term retention. This article covers the core principles of weapon balancing, the practical workflows development teams use, the metrics that matter, common pitfalls to avoid, and how communication with players can make or break a balance cycle.

Why weapon balancing matters

When weapon balancing is done well, players feel rewarded for skillful play. Choices matter, and a diverse set of viable tools encourages creativity and long-term engagement. When balancing fails, a single option can dominate the meta or a tool can become unusable, which leads to frustration and churn. This is why studios invest in analytics, targeted playtests, and careful patches aimed at keeping the experience fair and fresh.

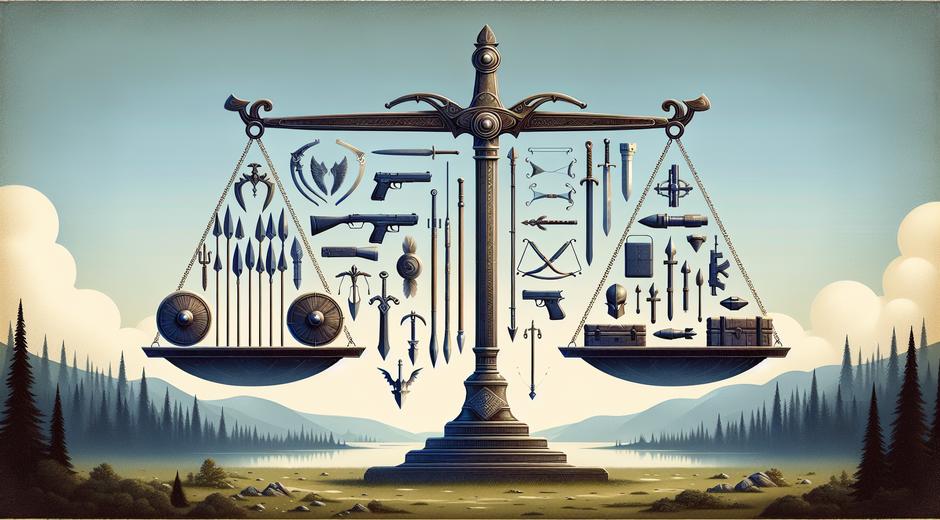

Design principles for weapon balancing

A few core principles guide most weapon balancing efforts. First, make sure each weapon offers a clear trade-off. If a gun has very high damage, it should be counterweighted by a lower rate of fire or worse accuracy. Second, aim for clarity in how each weapon works so that skilled players can reliably predict outcomes. Third, preserve distinct roles so that choices feel meaningful rather than redundant. Finally, respect the learning curve by making the most powerful options require skill to use consistently.
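One way to make the damage versus rate-of-fire trade-off concrete is to compare expected damage per second. The sketch below is illustrative only: the `Weapon` fields, the formula (damage times fire rate times hit chance), and every stat value are assumptions rather than figures from any real game, and the model ignores reloads, falloff, and headshots.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Weapon:
    name: str
    damage: float            # damage per hit
    shots_per_second: float  # rate of fire
    accuracy: float          # fraction of shots expected to land, 0..1


def expected_dps(w: Weapon) -> float:
    """Expected damage per second: damage * fire rate * hit chance."""
    return w.damage * w.shots_per_second * w.accuracy


def expected_time_to_kill(w: Weapon, target_health: float) -> float:
    """Rough seconds needed to deplete target_health at the expected DPS."""
    return target_health / expected_dps(w)


# Hypothetical stats chosen only to illustrate the trade-off.
hand_cannon = Weapon("hand_cannon", damage=80.0, shots_per_second=1.5, accuracy=0.5)
smg = Weapon("smg", damage=12.0, shots_per_second=10.0, accuracy=0.5)

for w in (hand_cannon, smg):
    print(w.name, expected_dps(w), expected_time_to_kill(w, target_health=120.0))
```

With these made-up numbers, both weapons land on the same expected DPS even though they play very differently, which is the kind of parity the trade-off principle aims for. Changing one stat shows how quickly that parity breaks.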

Common metrics used to measure weapon performance

Data-driven design is central to modern weapon balancing. Teams track metrics such as win rate by weapon, pick rate, damage per minute, and kill/death ratio across player skill bands. Match-level metrics like time to first kill or average engagement distance reveal context for weapon performance. Heat maps and shot tracing data help designers understand whether accuracy or positioning is the underlying issue. Qualitative feedback gathered from focused playtests completes the picture by surfacing player perception, which pure numbers can miss.
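As a rough illustration of how pick rate and win rate might be broken down by skill band, the following sketch aggregates a handful of records. The record layout, the band names, and the function name `weapon_metrics` are all hypothetical; real telemetry pipelines will look different.

```python
from collections import defaultdict

# Hypothetical per-player match records: (weapon, skill_band, won_match)
records = [
    ("rifle", "gold", True),
    ("rifle", "gold", False),
    ("rifle", "bronze", True),
    ("shotgun", "gold", False),
    ("shotgun", "bronze", True),
    ("shotgun", "bronze", True),
    ("rifle", "gold", True),
    ("sniper", "gold", False),
]


def weapon_metrics(rows):
    """Return {(skill_band, weapon): {"pick_rate": ..., "win_rate": ...}}."""
    picks = defaultdict(int)        # (band, weapon) -> times picked
    wins = defaultdict(int)         # (band, weapon) -> matches won
    band_totals = defaultdict(int)  # band -> total picks in that band
    for weapon, band, won in rows:
        picks[(band, weapon)] += 1
        wins[(band, weapon)] += int(won)
        band_totals[band] += 1
    return {
        key: {
            "pick_rate": count / band_totals[key[0]],
            "win_rate": wins[key] / count,
        }
        for key, count in picks.items()
    }


metrics = weapon_metrics(records)
for (band, weapon), stats in sorted(metrics.items()):
    print(band, weapon, round(stats["pick_rate"], 2), round(stats["win_rate"], 2))
```

Splitting by skill band matters because a weapon can look balanced in aggregate while dominating one band and being ignored in another.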

Iterative balancing workflows

A robust balancing workflow moves from hypothesis to test to validation. Start with a clear hypothesis for why a weapon is underperforming or overperforming. Then implement a focused change that targets the core issue while minimizing knock-on effects. Use an internal build or a small-scale public test to collect targeted data. After evaluating the results, the team either ships the change or pivots. Iteration must be fast enough to respond to the live game but measured enough to avoid swinging the meta wildly with every patch.
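For the validation step, one possible check is whether a weapon's win rate actually moved between the baseline build and the test build. The sketch below uses a two-proportion z-test, which is one common choice and not necessarily what any given studio uses. The match counts are invented, and the test assumes independent samples, which real match data only approximates.

```python
import math


def two_proportion_z_test(wins_a, n_a, wins_b, n_b):
    """Two-sided z-test for a difference between two win rates.

    Returns (z, p_value), where z is positive when group B wins more often.
    """
    p_a, p_b = wins_a / n_a, wins_b / n_b
    pooled = (wins_a + wins_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("win rates have no variance; the test is undefined")
    z = (p_b - p_a) / std_err
    # Two-sided p-value under the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: baseline build vs. test build after a nerf.
z, p = two_proportion_z_test(wins_a=5200, n_a=10000, wins_b=5000, n_b=10000)
print(f"z={z:.2f}, p={p:.4f}")
```

A small p-value suggests the change had a real effect on win rate, but it says nothing about whether the new value is the right one. That judgment still comes from the original hypothesis and from playtest feedback.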

Playtesting strategies that improve results

Diverse playtesting is essential. Use controlled lab tests to isolate variables and large-scale beta tests to see real-world interactions. Playtest groups should include both expert players who push systems to extremes and casual players who reveal usability problems. Cross-platform testing helps ensure that differences in input methods do not create imbalances. Recording sessions and collecting detailed telemetry lets designers replay unusual situations and find root causes faster.

Handling competitive environments and esports

Competitive titles require a special level of discipline. In esports, a single element can define a tournament meta for months. Balance decisions here benefit from consultation with pro players and tournament organizers. When changes are necessary, communicate timing and intent clearly to the scene. Developers often freeze a build for major events or provide alternative rule sets for competitive play so that the high-level scene can develop independently while the casual player base stays engaged.

Case study approaches without naming names

Studios take different approaches to weapon balancing. One common pattern is the slow tune, where small incremental tweaks are applied across many builds. This reduces risk but can frustrate players who expect more decisive action. Another pattern is the surgical patch, where a single large change is applied to one problematic weapon while the others are left alone. This is effective when a particular tool breaks multiple systems. Successful teams combine data with community feedback and avoid making changes driven by loud anecdotes alone.

Balancing in live service games

Live service games require ongoing balancing as new weapons and mechanics are added. Each new addition can shift the power curve and change player behavior. To manage this, teams use feature flags, progressive rollouts, and targeted telemetry to understand the impact. Communication is crucial. Explain the design intent for new weapons and publish notes that describe the data you will monitor. Transparency helps players understand that changes are not arbitrary and reduces hostility when adjustments follow.
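A progressive rollout behind a feature flag can be sketched with a stable hash of the player ID, so that each player consistently sees the same variant as the rollout percentage grows. The flag name, player ID format, and function names below are hypothetical, and this is a minimal sketch rather than a description of any particular feature-flag service.

```python
import hashlib


def rollout_bucket(player_id: str, flag_name: str) -> int:
    """Map a player and flag to a stable bucket in the range 0..99."""
    digest = hashlib.sha256(f"{flag_name}:{player_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def is_enabled(player_id: str, flag_name: str, rollout_percent: int) -> bool:
    """True if the player falls inside the current rollout percentage."""
    return rollout_bucket(player_id, flag_name) < rollout_percent


# Hypothetical flag for a new weapon tuning, widened in stages.
for percent in (5, 25, 100):
    enabled = sum(
        is_enabled(f"player-{i}", "new_smg_recoil", percent) for i in range(1000)
    )
    print(percent, enabled)
```

Because the bucket depends only on the flag name and the player ID, raising the percentage only adds players and never flips someone back to the old tuning mid-rollout. Including the flag name in the hash keeps the same players from always landing in every early cohort.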

Psychology of player perception

Players do not judge weapons by raw effectiveness alone. Visual and audio feedback shapes perceived power. A gun that sounds punchy and has dramatic hit effects will feel stronger than a more effective tool with muted feedback. Tuning aesthetic feedback can be a low-risk way to address perception gaps while you work on the underlying numbers. Use this approach carefully, though, to avoid creating mismatches between feel and actual performance that could harm trust.

Anti-abuse and edge cases

Balancing must account for unintended synergies that appear in emergent play. Combinations of weapons with certain movement options or environmental interactions can create abuse cases. Keeping a watchful eye on outlier matches and using automated anomaly detection helps catch these early. When addressing these issues, consider whether the root problem is a weapon stat or a system interaction. Fixing the underlying system often yields more robust results than repeatedly nerfing individual tools.
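Automated anomaly detection can start very simply. The sketch below flags outlier matches using a modified z-score based on the median absolute deviation, which is one robust option among many. The threshold of 3.5 is a commonly cited default, the function name `flag_outliers` is hypothetical, and the damage values are invented for illustration.

```python
import statistics


def flag_outliers(values, threshold=3.5):
    """Return indices of values whose modified z-score exceeds threshold.

    Uses the median and median absolute deviation (MAD), which are far less
    distorted by the outliers themselves than the mean and standard deviation.
    """
    median = statistics.median(values)
    mad = statistics.median([abs(v - median) for v in values])
    if mad == 0:
        # No spread to compare against, so nothing can be flagged.
        return []
    return [
        i
        for i, v in enumerate(values)
        if abs(0.6745 * (v - median) / mad) > threshold
    ]


# Hypothetical damage-per-match totals for one weapon.
damage_per_match = [410, 395, 420, 405, 388, 415, 400, 1950]
print(flag_outliers(damage_per_match))
```

A flagged match is only a prompt for investigation. Replaying it is what tells you whether the cause is a weapon stat, a movement interaction, or simply an exceptional player.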

Community engagement and patch transparency

How you communicate changes matters as much as the changes themselves. Publish clear patch notes that explain why adjustments were made and what metrics guided the decision. Provide a roadmap for future work and invite informed feedback through structured channels such as developer-led forums and surveyed playtests. A transparent approach builds goodwill and makes the player base a partner in the balancing process rather than an adversary.

Practical tips for small teams

Smaller teams can still achieve great balance with limited resources. Focus on the most played weapons first, collect high-level telemetry that covers the core metrics, and run frequent short playtests. Use a small group of committed testers from your community to broaden coverage without large overhead. When in doubt, simplify. Fewer moving parts reduce the risk of complex interactions and make problems easier to diagnose.

Wrapping up

Weapon balancing is both a science and an art. It requires solid data and careful experiments but also an appreciation for player psychology and game feel. By combining clear principles with an iterative workflow and strong communication, you can keep weapons engaging and the meta healthy.